Analyze Dynamics 365 Finance / SCM Data in Synapse (Video)

20. February 2024

Synapse Link for Dataverse is the replacement for Export to Data Lake, which was deprecated in 2023. Although it is called a link for Dataverse, it can also be used to access Dynamics 365 Finance and Supply Chain tables (as well as CE data from Dataverse).

SQL change tracking has to be enabled in D365 F/SCM. Creates, updates and deletes are then written to a storage account in CSV format. Synapse runs a Spark pool that converts the CSVs into Delta Lake format, which builds on the open-standard Parquet format. As a result you can see and query the F/SCM tables in the lake as if they were tables in a relational database.

Good news: Synapse has a serverless SQL pool and a public SQL endpoint. You can find the SQL endpoint via the Manage icon (bottom left) > SQL Pools > select your pool > Workspace SQL Endpoint.

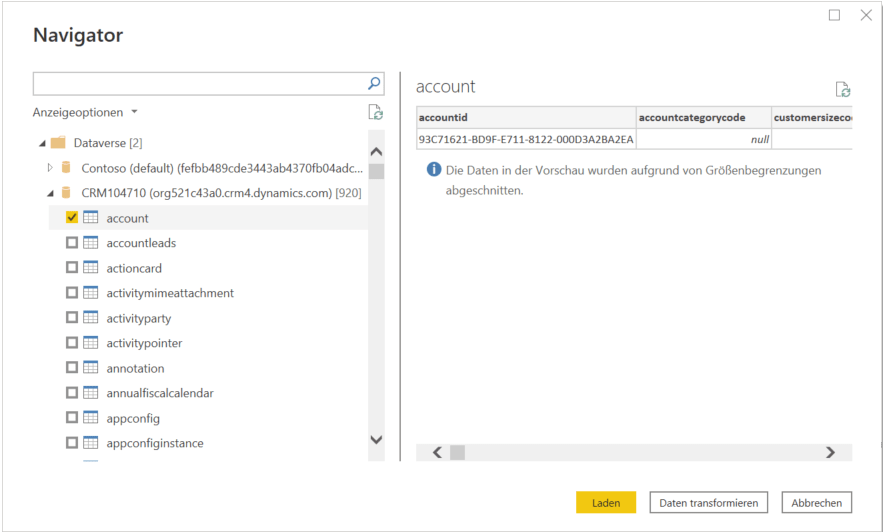

You can create databases in this SQL pool and create views based on the tables in the data lake. For example, join CustTable and DirPartyTable to provide a view that contains customer data with a name and address.
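To make the CustTable / DirPartyTable idea a bit more concrete, here is a minimal sketch that only builds the `CREATE VIEW` statement as a string. The lake database name, the view name and the lowercase column names (`accountnum`, `party`, `recid`, `name`) are assumptions for illustration, so check them against your own lake database. The address is left out because it would need further joins.

```python
def quote_name(name: str) -> str:
    """Bracket-quote a SQL identifier, escaping any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def build_customer_view_sql(lake_db: str, view_name: str = "CustomerNames") -> str:
    """Return a CREATE VIEW statement joining CustTable to DirPartyTable.

    lake_db is the (assumed) name of the database that exposes the
    F/SCM tables; view_name is a hypothetical name for the new view.
    """
    db = quote_name(lake_db)
    view = quote_name(view_name)
    return (
        f"CREATE VIEW dbo.{view} AS\n"
        # Column names below are assumptions, not taken from the post.
        f"SELECT c.accountnum AS CustomerAccount, p.name AS CustomerName\n"
        f"FROM {db}.dbo.custtable AS c\n"
        f"JOIN {db}.dbo.dirpartytable AS p ON p.recid = c.party;"
    )


# Print the statement so it can be pasted into a SQL script.
print(build_customer_view_sql("my_lake_database"))
```

Run the generated statement inside the database you created in the serverless SQL pool, not inside the lake database itself.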

You can use the development area of the web-based Synapse workspace, but you can also connect with other tools, e.g. SQL Server Management Studio. Both the tables in the data lake and the views in your own database can be accessed.
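Connecting from code works along the same lines as connecting from SSMS. The sketch below assumes the usual `<workspace>-ondemand.sql.azuresynapse.net` form of the serverless endpoint, an installed ODBC Driver 18 for SQL Server, the third-party `pyodbc` package and interactive Microsoft Entra sign-in. The workspace name, database name and view name are hypothetical placeholders.

```python
def build_connection_string(workspace: str, database: str) -> str:
    """Build an ODBC connection string for the serverless SQL endpoint."""
    # Assumed endpoint pattern; copy the real value from "Workspace SQL Endpoint".
    server = f"{workspace}-ondemand.sql.azuresynapse.net"
    parts = {
        "Driver": "{ODBC Driver 18 for SQL Server}",
        "Server": f"tcp:{server},1433",
        "Database": database,
        "Encrypt": "yes",
        # Interactive sign-in is an assumption; other auth modes are possible.
        "Authentication": "ActiveDirectoryInteractive",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


def fetch_rows(conn_str: str, query: str) -> list:
    """Run a query and return all rows (requires the pyodbc package)."""
    import pyodbc  # imported lazily so the helper above works without it

    conn = pyodbc.connect(conn_str)
    try:
        return conn.cursor().execute(query).fetchall()
    finally:
        conn.close()


# Hypothetical workspace and database names.
conn_str = build_connection_string("myworkspace", "reporting")
print(conn_str)
```

With a real workspace you could then call something like `fetch_rows(conn_str, "SELECT TOP 10 * FROM dbo.CustomerNames")`, where `CustomerNames` stands for whatever view you created.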

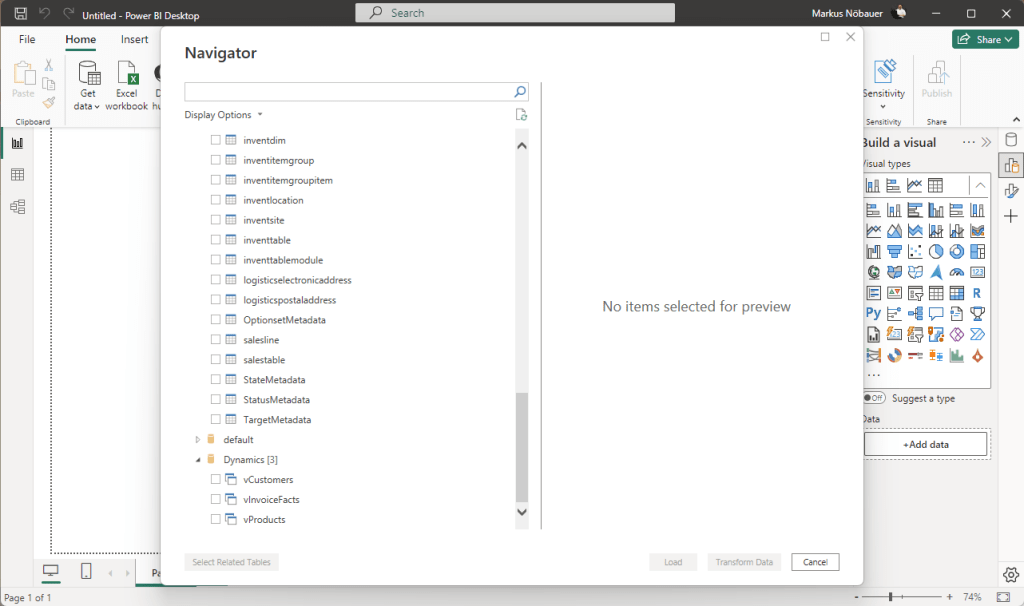

Power BI has a built-in connector for Synapse workspaces. You can easily load data from the tables and also from the views.