Order Change: Notify customers using Business Events and Flow

15. January 2025

The combination of the Dynamics 365 Finance and Supply Chain Management cloud ERP and Microsoft Flow (now Power Automate) helps to automate many tasks. For example, you can easily notify a customer when the confirmed shipping date of an order changes.

Concept

- Configure a Blob Storage Endpoint for Business Events

- Create a Change Based alert for ShippingDateConfirmed

- Add a flow that triggers on new events

- Read the email address from the sales order and notify the customer

Configure a Business Event Endpoint

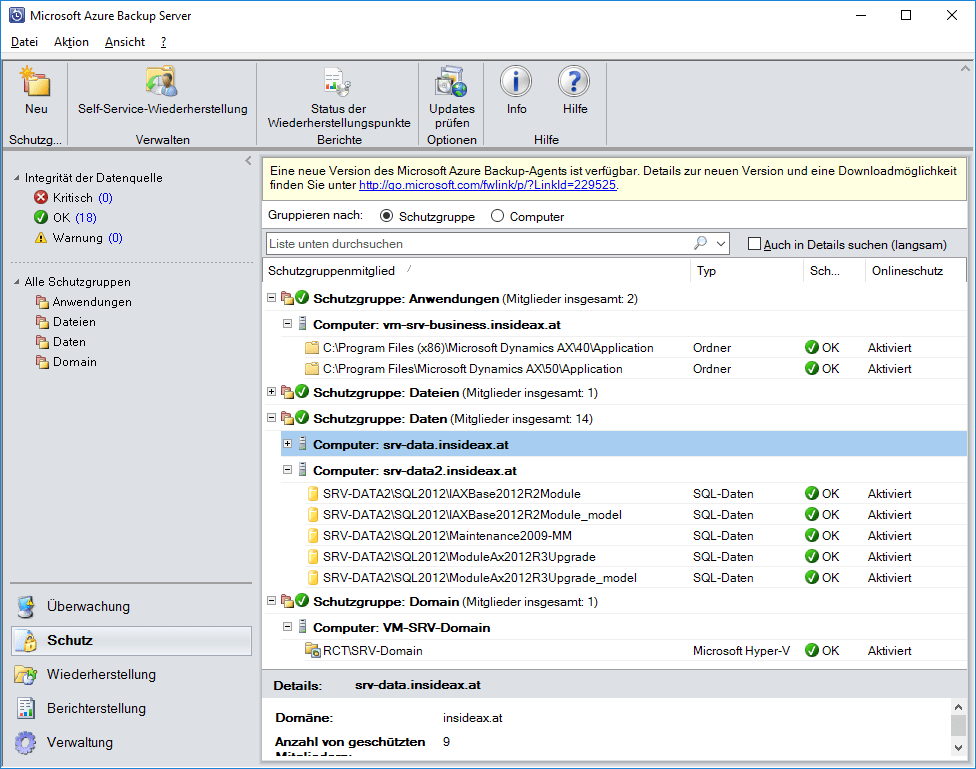

Business events in Dynamics 365 FSCM can be used to notify external systems. They support different endpoint types such as Azure Event Hub, HTTPS webhook and Blob Storage Account. I personally prefer a storage account because it is very cheap and the infrastructure is easy to use, understand and support.

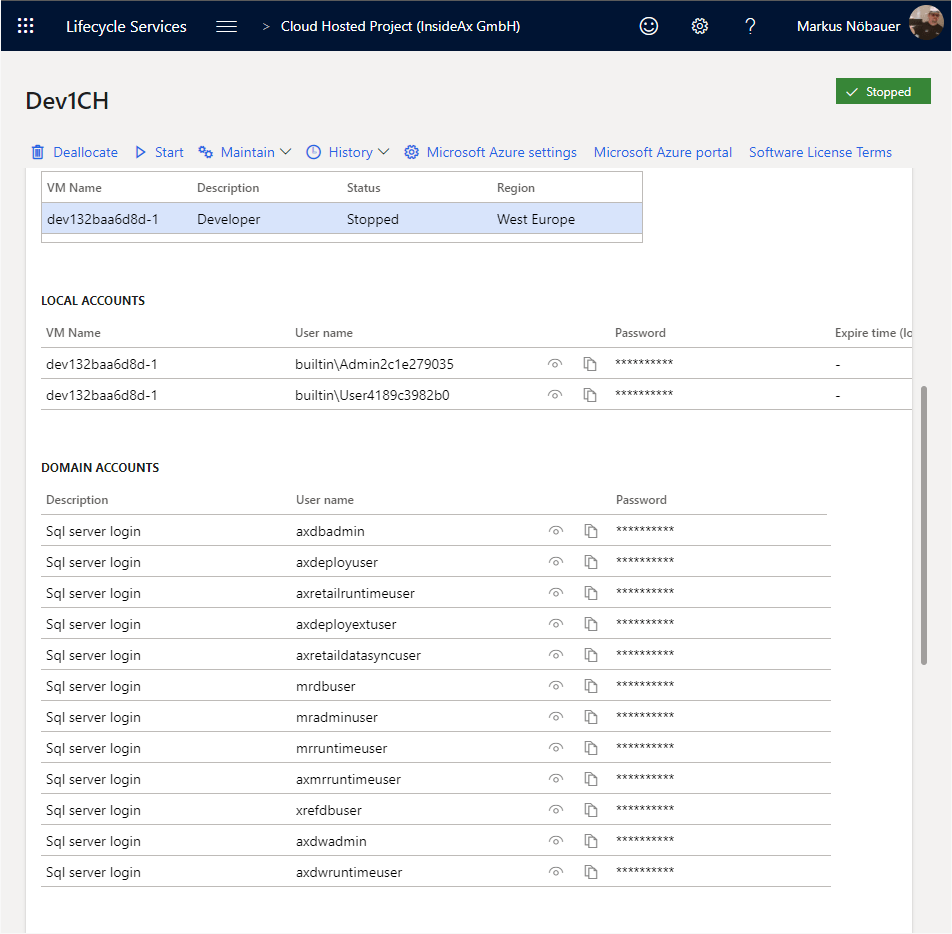

In the Microsoft Entra admin center (formerly Azure Active Directory), create a new app registration. Note the client ID, then create a new client secret and note its value as well.

To use a Blob container for Business Events, you need a few Azure resources. First, you need a storage account with a public endpoint. Copy the storage account's connection string. Then select Storage browser in the menu on the left, navigate to the Blob storage and create a new container.

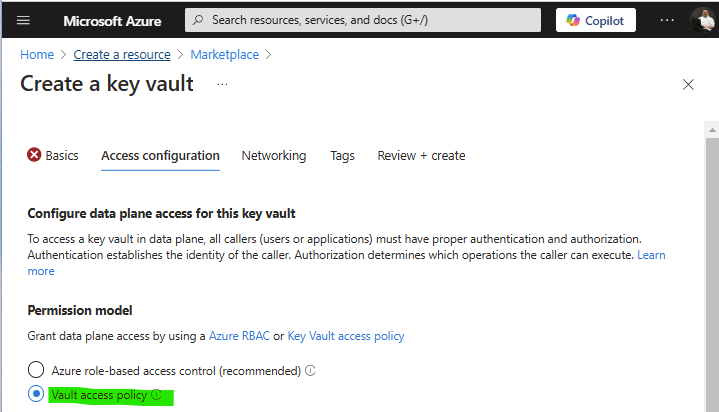

Next, create a key vault to store the connection string. When creating the key vault, make sure to select Vault access policy as the permission model. In the key vault, create a new secret and place the connection string there.
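Before pasting the connection string into the key vault secret, it can be worth a quick sanity check, because a truncated copy is easy to miss. The following is a minimal sketch, assuming an account-key connection string in the usual `Key=Value;Key=Value` form. The function names and the example value are made up for illustration and are not part of any Azure SDK.

```python
REQUIRED_KEYS = {"AccountName", "AccountKey"}  # assumption: account-key style string


def parse_connection_string(conn_str: str) -> dict:
    """Split a 'Key=Value;Key=Value' connection string into a dict."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only: base64 account keys end with '=' padding.
        key, sep, value = segment.partition("=")
        if not sep or not key:
            raise ValueError(f"Malformed segment: {segment!r}")
        parts[key] = value
    return parts


def missing_keys(conn_str: str) -> set:
    """Return the required keys that are absent from the connection string."""
    return REQUIRED_KEYS - set(parse_connection_string(conn_str))


# Placeholder value, not a real account or key.
example = (
    "DefaultEndpointsProtocol=https;AccountName=mystorageaccount;"
    "AccountKey=abc123==;EndpointSuffix=core.windows.net"
)
print(sorted(missing_keys(example)))  # prints: []
```

An empty result only says that the expected parts are present. It does not prove that the key itself is valid.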

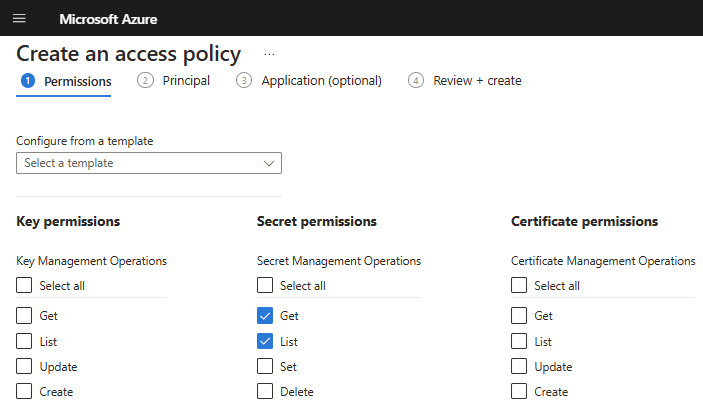

In the Key Vault switch to Access Policies and create a new one. Assign the registered app the rights to list and get secrets.

In Dynamics 365 Finance and Supply Chain Management, open the Business Event Catalog (System Administration > Setup > Business Events). Switch to the Endpoints tab and create a new Blob endpoint. In the dialog, provide

- a meaningful name

- the name of the Blob container

- client ID from the app registration

- client secret

- the key vault's URI (from the key vault's overview pane), e.g. https://yourkeyvault.vault.azure.net/

- the name of the secret that holds the connection string

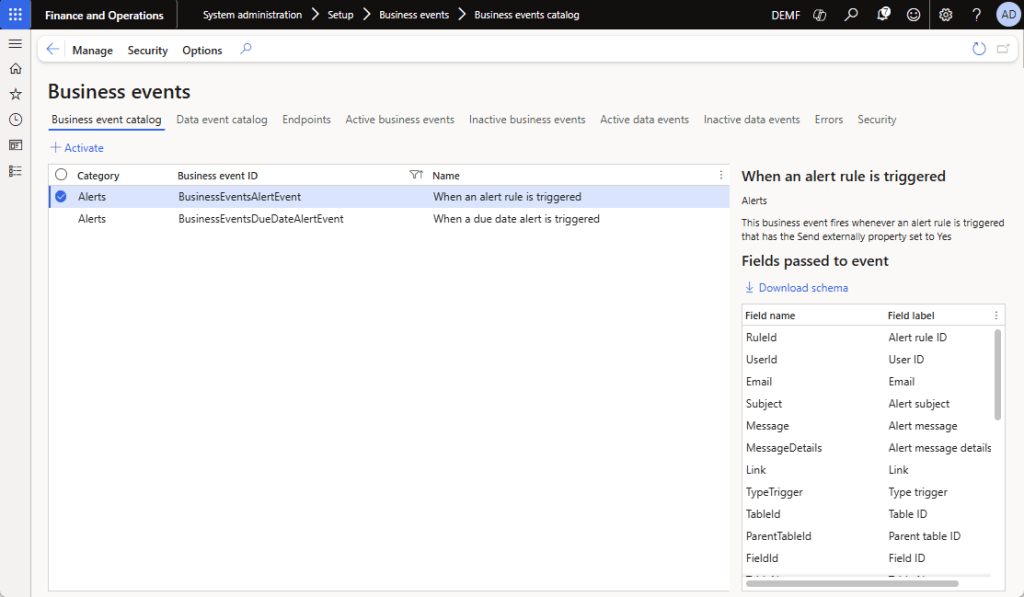

Switch to the Business Event Catalog and filter the Business Event ID entries containing the term “Alert”. Make sure you select the BusinessEventsAlertEvent and click on Activate. In the dialog select the legal entity and the recently created blob endpoint.

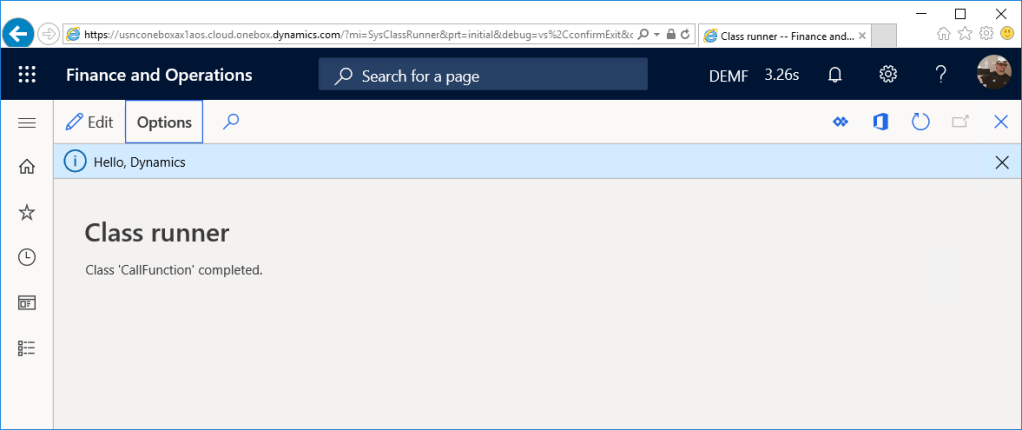

Test Business Event Endpoint configuration for Alerts

Make sure you have a batch job handling change-based alerts in Dynamics 365 Finance and Supply Chain Management. If you don't have such a batch job, create one from System Administration > Periodic Tasks > Alerts > Change Based Alerts. Change the recurrence to no end date and provide a time interval, e.g. 10 minutes.

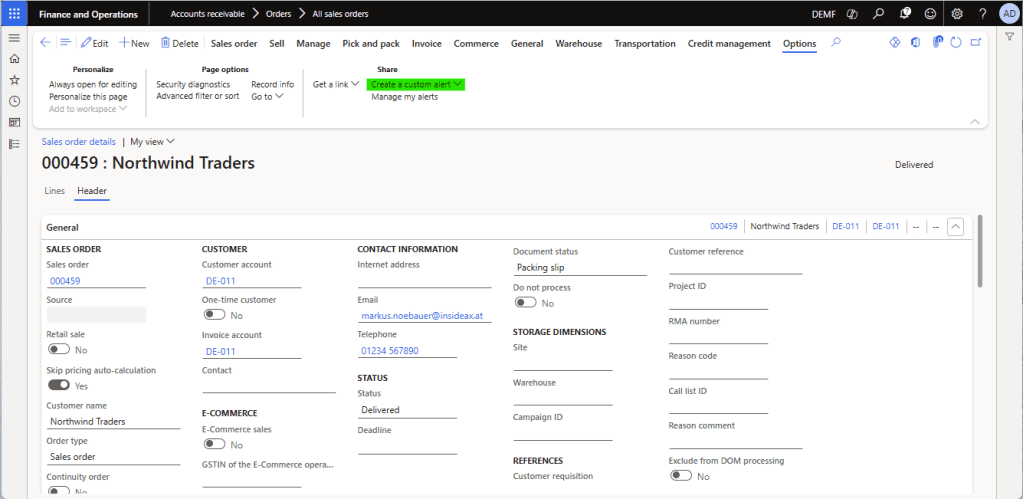

In Dynamics 365 FSCM go to an existing sales order or create one. In the top menu switch to Options and select Create custom alert.

In the alert dialog choose the Confirmed Ship Date from the field drop down. This will change the alert trigger to Has Changed. Make sure to activate the Send Externally Option as well. Save the alert rule.

Change the confirmed ship date in the sales order. Depending on the time interval of the change-based alerts batch job, it may take a few minutes until you are notified that the value has changed.

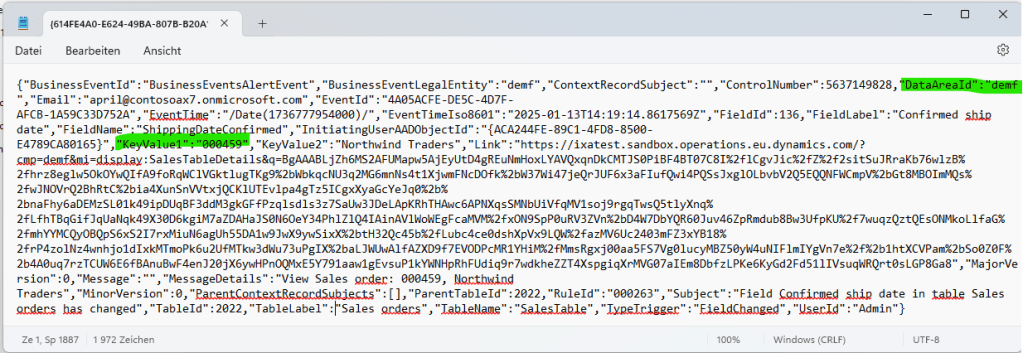

Switch to the Azure Portal and go to your storage account. From the Storage browser, select the Blob storage and the container you created for the business events. There you should see at least one entry with a GUID as its name.

Download the file and open it in a text editor. It should contain the JSON for the business event. You will find the sales order number in KeyValue1 and the legal entity in the DataAreaId property. You can use these values to look up the sales order in D365.
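If you prefer to check the downloaded file with a script instead of reading it by eye, the two properties can be pulled out as follows. This is a minimal sketch: the sample event is a hypothetical, trimmed-down payload built only from the properties mentioned in this post. A real event file contains more properties.

```python
import json

# Hypothetical, shortened event for illustration; not a complete payload.
sample_event = (
    '{"BusinessEventId": "BusinessEventsAlertEvent", '
    '"DataAreaId": "demf", "KeyValue1": "001234"}'
)


def extract_order_reference(event_json: str) -> tuple:
    """Return (legal entity, sales order number) from the event JSON."""
    event = json.loads(event_json)
    # A missing property raises KeyError, which is useful while testing.
    return event["DataAreaId"], event["KeyValue1"]


company, sales_order = extract_order_reference(sample_event)
print(company, sales_order)  # prints: demf 001234
```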

Create a flow to notify the customer

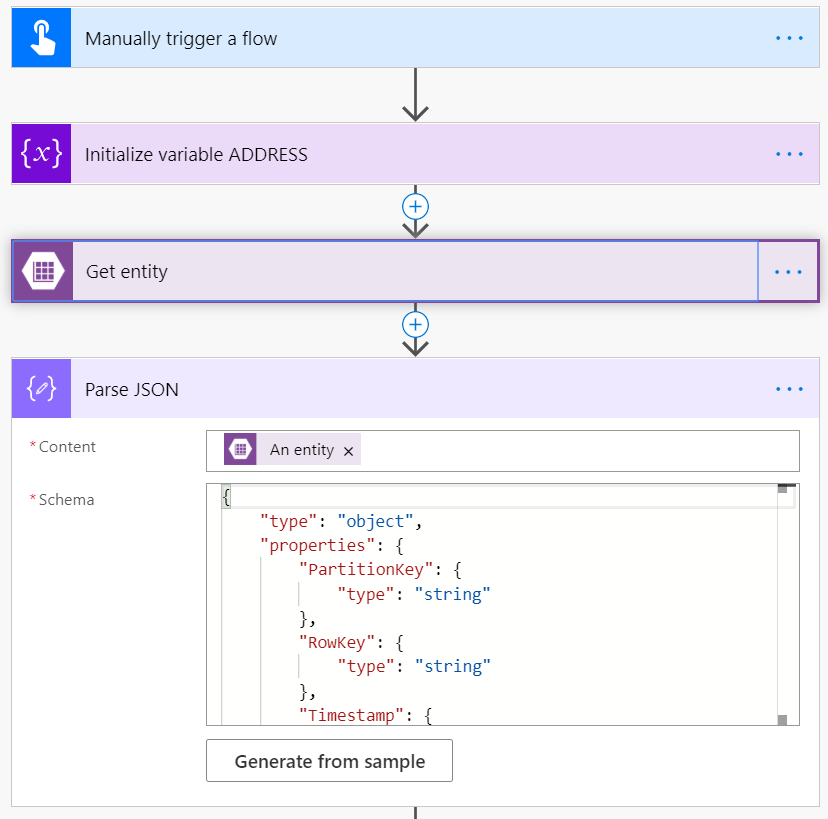

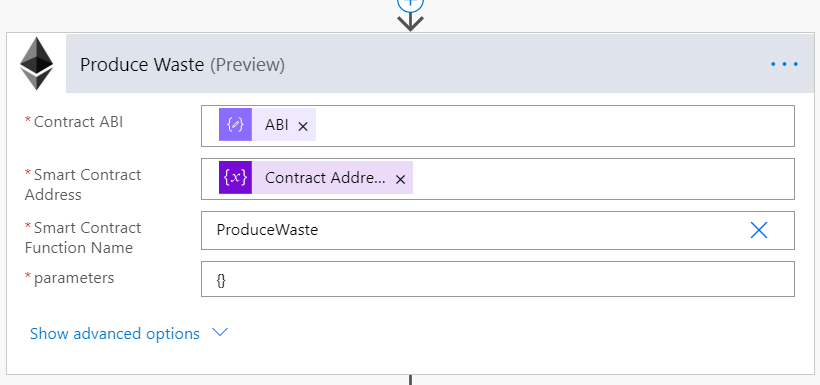

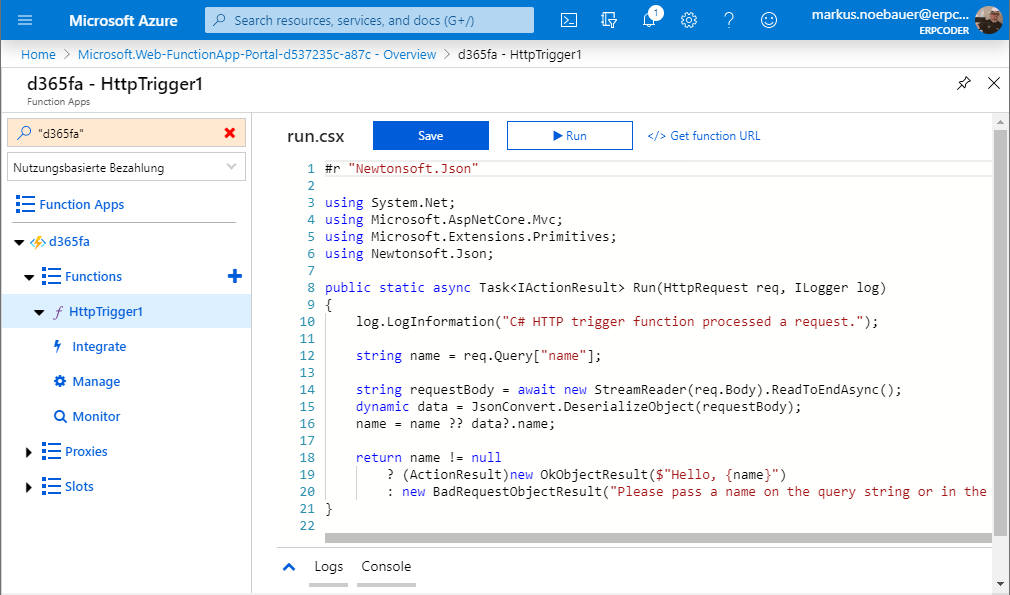

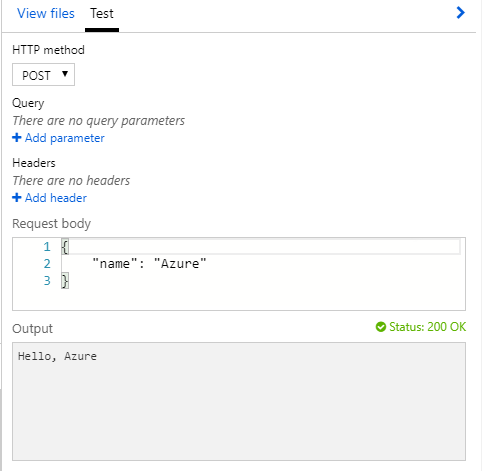

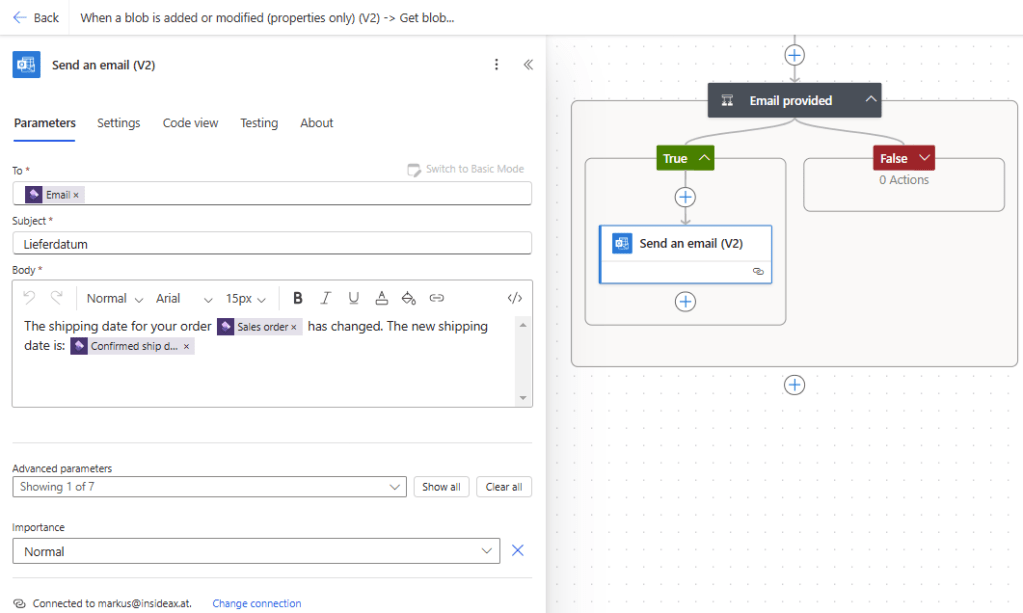

Go to Power Automate and create a new flow that triggers when a blob is created or modified. Check whether the event was a shipping date change and send an email to the customer. The flow may look like this.

The first action, Get Blob Content, downloads the event file itself. The next action parses the event JSON string. Since the blob file has no file extension, the content has to be passed to the parser wrapped in string(). The schema can be generated from an example: copy the JSON string from the test file and Flow will generate the schema.
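To illustrate why the conversion to a string is needed, here is a rough Python equivalent of what happens between the two actions. It is a sketch under an assumption: content without a recognised type reaches the flow as a wrapper object with a base64 payload under `$content`. The helper names are made up for this example.

```python
import base64
import json


def content_to_text(blob_content) -> str:
    """Return the blob content as text, whatever shape it arrived in."""
    if isinstance(blob_content, dict) and "$content" in blob_content:
        # Assumed binary wrapper: base64 payload stored under "$content".
        return base64.b64decode(blob_content["$content"]).decode("utf-8-sig")
    if isinstance(blob_content, bytes):
        # "utf-8-sig" also strips a byte order mark if one is present.
        return blob_content.decode("utf-8-sig")
    return str(blob_content)


def parse_event(blob_content) -> dict:
    """Convert the content to text first, then parse it as JSON."""
    return json.loads(content_to_text(blob_content))


raw = b'{"DataAreaId": "demf"}'
wrapped = {
    "$content-type": "application/octet-stream",
    "$content": base64.b64encode(raw).decode("ascii"),
}
print(parse_event(wrapped))  # prints: {'DataAreaId': 'demf'}
```

Without the text conversion the parser would receive the wrapper object instead of the event itself.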

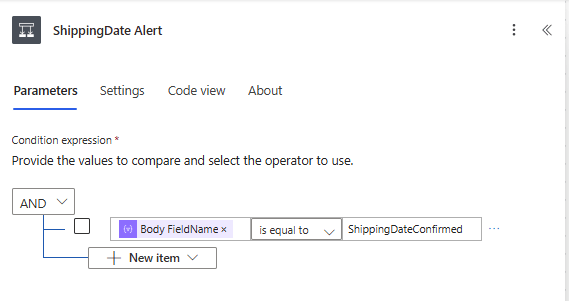

Because the blob storage account may be used by other business events in the future, it is advisable to add a condition that checks whether the alert was triggered by the shipping date.
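The logic of such a condition can be sketched in a few lines. Which property of the event identifies the alert rule depends on your payload, so the property name `AlertField` and its value below are purely hypothetical placeholders. Inspect your downloaded test file to find a suitable property.

```python
ALERT_EVENT_ID = "BusinessEventsAlertEvent"


def is_shipping_date_alert(event: dict, marker_property: str, marker_value: str) -> bool:
    """True only for alert events whose marker property has the expected value."""
    # Ignore every other business event that may land in the same container.
    if event.get("BusinessEventId") != ALERT_EVENT_ID:
        return False
    return event.get(marker_property) == marker_value


# Hypothetical property name and value; replace them with what your file contains.
event = {"BusinessEventId": ALERT_EVENT_ID, "AlertField": "ShippingDateConfirmed"}
print(is_shipping_date_alert(event, "AlertField", "ShippingDateConfirmed"))  # prints: True
```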

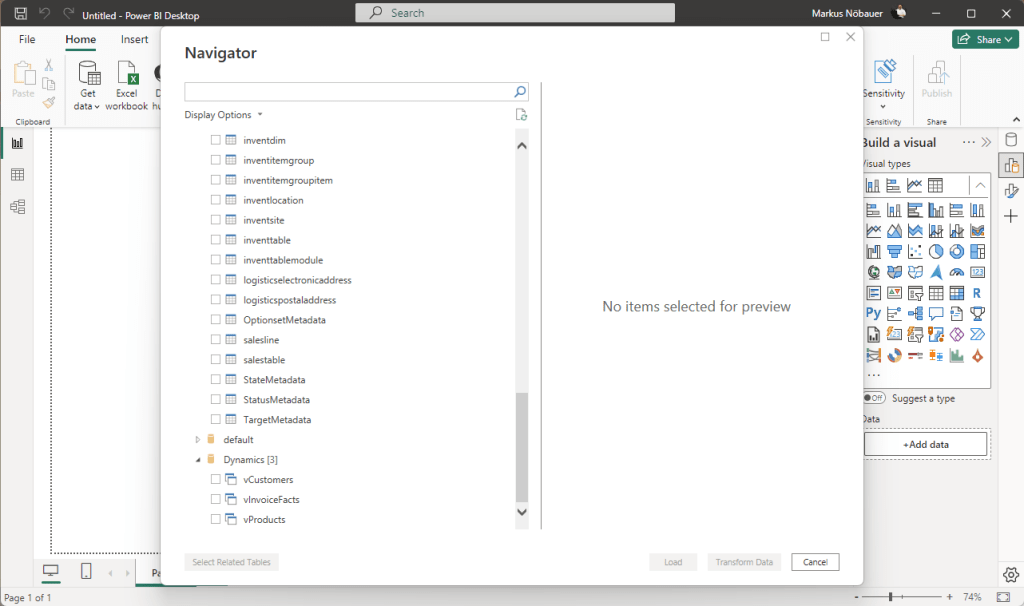

Next, use DataAreaId and KeyValue1 to look up the sales order in Dynamics 365 FSCM by combining both values separated by a comma (e.g. demf,001234).
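The combined lookup value is simple to build, but an empty part silently produces a key that finds nothing. A small sketch of the same step with that guard added (the function name is made up for this example):

```python
def build_record_key(data_area_id: str, sales_order_number: str) -> str:
    """Join legal entity and sales order number with a comma."""
    parts = [data_area_id.strip(), sales_order_number.strip()]
    if not all(parts):
        raise ValueError("Both legal entity and sales order number are required")
    return ",".join(parts)


print(build_record_key("demf", "001234"))  # prints: demf,001234
```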

Add a second condition to check whether an email address is provided in the sales order header. If so, use the Send Email action to notify the customer. If required, you can extend the FALSE branch to look up the customer record and find an email address there.
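The decision behind this condition, including the optional fallback to the customer record, boils down to "take the first address that is not empty". A minimal sketch with made-up names and addresses:

```python
from typing import Optional


def pick_recipient(order_email: Optional[str], customer_email: Optional[str] = None) -> Optional[str]:
    """Prefer the sales order email, fall back to the customer email."""
    for candidate in (order_email, customer_email):
        # Treat None, empty and whitespace-only values as "no address".
        if candidate and candidate.strip():
            return candidate.strip()
    return None  # nobody to notify; skip the Send Email step


print(pick_recipient("", "customer@example.com"))  # prints: customer@example.com
```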

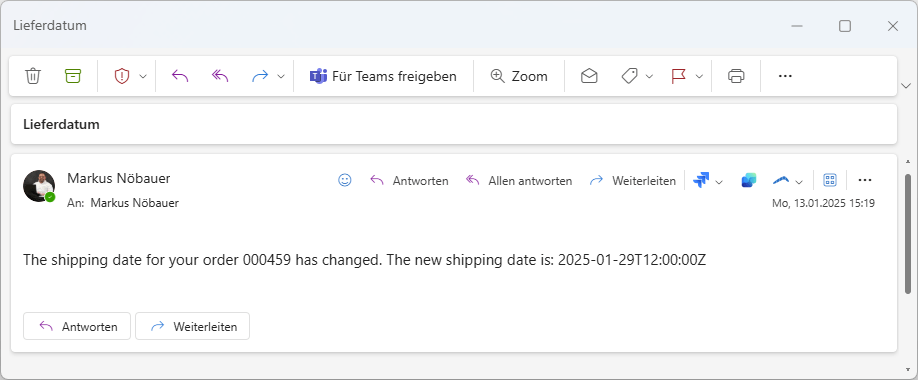

The final email may look like this